Quick Summary:

In an era where data drives decisions and innovation, mastering the latest data engineering best practices is crucial. This blog delivers essential insights and actionable Data engineering strategies for 2025, ensuring your data pipelines are not only efficient but also future-proof. Dive in to discover how to enhance data quality, streamline processes, and maximize business value with cutting-edge techniques.

In this blog, we’re going to discuss📝

The software engineering world underwent a revolution with DevOps bridging silos between teams, enabling continuous delivery, and creating frameworks that could produce deployable software at any moment. Similarly, data engineering is on the cusp of its transformation.

The exponential growth of data volume, complexity, and demand for real-time insights has placed immense pressure on engineering teams to innovate. The solution lies in adopting modern best practices that mirror the success of software engineering methodologies. These practices promise to break down barriers, accelerate data product delivery, and ensure the reliability of pipelines at scale.

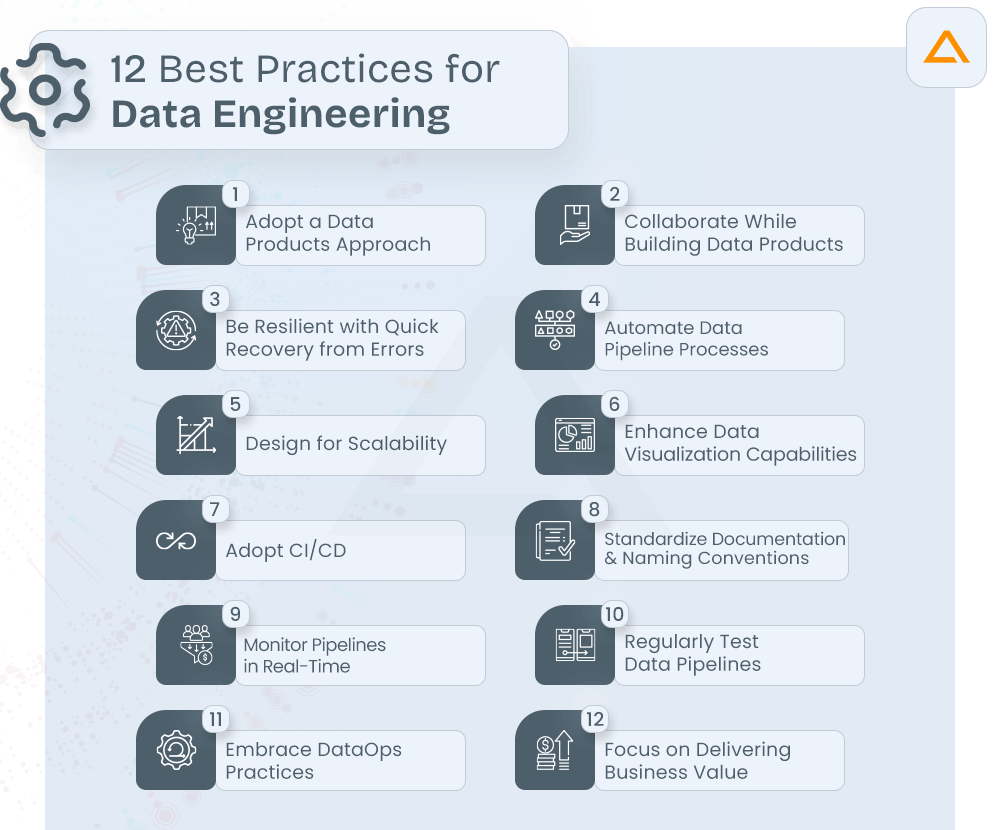

This blog outlines 12 key best practices for 2025 that will redefine data engineering, making your pipelines scalable, automated, and business-ready.

Best 12 Practices for Data Engineering

- Adopt a Data Products Approach

- Collaborate While Building Data Products

- Be Resilient with Quick Recovery from Errors

- Automate Data Pipeline Processes

- Design for Scalability

- Enhance Data Visualization Capabilities

- Adopt Continuous Integration and Continuous Delivery

- Standardize Documentation and Naming Conventions

- Monitor Pipelines in Real-Time

- Regularly Test Data Pipelines

- Embrace DataOps Practices

- Focus on Delivering Business Value

Data engineering is the backbone of any data-driven organization. Building effective data pipelines that are scalable, reliable, and efficient is crucial for extracting actionable insights and driving business growth. Below are some best practices to ensure your data pipelines are optimized for performance and long-term success.

1. Adopt a Data Products Approach

The concept of a data product is transformative: treating data as an end product rather than a by-product of business operations. A data product serves consumers (internal teams or external customers) by delivering actionable insights in an accessible format. We help our clients treat their data pipelines as products, each with defined SLAs, clear requirements, and a focus on continuous improvements.

Our data engineers ensure processes are in place to maintain data quality, including automated testing for completeness and freshness. We also align teams with KPIs that measure both pipeline availability and the relevance of the data itself.

Example:

- E-Commerce Retailer: The recommendation engine is a data product that regularly updates personalized product suggestions based on customer behavior, ensuring relevance and quality for a better shopping experience.

- Healthcare Organization: The patient insights dashboard aggregates and processes health data in real-time, offering actionable insights to doctors for more informed decision-making and enhanced patient care.

- Financial Services Firm: The fraud detection system analyzes transaction data in real-time, ensuring timely and accurate alerts. It’s continuously improved through defined SLAs and automated quality checks to maintain reliability.

2. Collaborate While Building Data Products

Data engineering thrives on collaboration across diverse teams, including data scientists, business analysts, and developers. Collaboration ensures that data pipelines are not only aligned with business objectives but also adaptable as needs evolve.

By integrating tools like Git for version control and Apache Airflow for workflow orchestration, teams can work independently while maintaining unified pipelines. This encourages regular feedback loops, ensuring alignment among stakeholders and optimizing the business value derived from the data products.

3. Be Resilient with Quick Recovery from Errors

No data pipeline is immune to errors, but high-performing teams distinguish themselves by their ability to recover quickly and efficiently. Building resilience means creating pipelines that can reprocess data without causing inconsistencies or duplication.

Integrating real-time monitoring systems like Datadog and Prometheus provides insights into pipeline health, and engineers can set up automated error detection and rollback mechanisms. Additionally, using Docker and Kubernetes helps isolate and resolve issues quickly without affecting production.

4. Automate Data Pipeline Processes

Automation is a critical aspect of modern data engineering. One of the ways to achieve this is by setting up data pipelines that automate the flow of data from source to destination. These pipelines are designed to minimize manual intervention and ensure data consistency. For more details on how to implement and build efficient pipelines, check out this guide on how to build data pipelines.

5. Design for Scalability

Scalability is a must as businesses grow and their data needs expand. It involves both technical and architectural considerations to ensure your pipeline can handle future increases in data volume.

Designing modular pipeline architectures where each stage: ingestion, transformation, and storage; can scale independently is essential. Leveraging distributed storage systems like Hadoop or Amazon S3, alongside compute engines like Apache Spark, ensures that pipelines can scale smoothly as data volumes increase. Simulating data bursts helps anticipate growth, addressing scaling challenges before they affect performance.

6. Enhance Data Visualization Capabilities

Data visualization tools play a pivotal role in making data accessible and actionable for various teams within an organization. Integrating the right visualization tools into your workflow not only enhances understanding but also ensures that insights are communicated effectively. For a comprehensive list of tools, explore this overview of the best data visualization tools available in the market.

7. Adopt Continuous Integration and Continuous Delivery (CI/CD)

CI/CD practices have revolutionized software engineering by enabling faster deployments with minimal risk. The same principles apply to data pipelines. Implementing CI/CD pipelines for data engineering automates tests for schema changes, data validation, and transformation logic.

Platforms like Jenkins or GitLab allow for automated deployment workflows, ensuring every change is tested and validated before going live. This process ensures frequent updates can happen safely without disrupting operations.

8. Standardize Documentation and Naming Conventions

Consistency is key in any engineering discipline, especially in data engineering. Clear naming conventions for datasets, pipelines, and scripts ensure that everyone in the team can easily understand and work with the system.

Comprehensive documentation including pipeline architecture, data transformations, and troubleshooting steps supports both current team members and new hires. Standardization is critical for smoother team collaboration and faster onboarding.

9. Monitor Pipelines in Real-Time

Pipelines require constant monitoring to maintain efficiency and avoid issues that can disrupt operations.

Real-time monitoring platforms like Apache Superset and Grafana visualize pipeline performance metrics. These platforms, combined with automated alerts, allow for proactive identification and resolution of issues before they escalate, ensuring a smooth, continuous operation.

10. Regularly Test Data Pipelines

Testing is vital to the reliability of data pipelines. Not only should unit tests be conducted, but integration and performance testing must be emphasized to ensure that pipelines can handle real-world data loads.

Tools like dbt (Data Build Tool) help automate tests for data models, validating schema integrity, uniqueness, and overall data quality. This process ensures that data remains accurate and the pipelines perform optimally under all conditions.

11. Embrace DataOps Practices

DataOps, similar to DevOps, is a methodology focused on automating, collaborating, and continuously improving data engineering processes.

Integrating DataOps principles helps streamline the journey from data ingestion to actionable insights. By automating tasks such as pipeline deployments and enabling self-service analytics, teams can respond more quickly to changing business needs while reducing the workload of routine tasks.

12. Focus on Delivering Business Value

Every decision made in data engineering should have a direct connection to business goals. By closely collaborating with stakeholders, we identify the key metrics and goals that will drive actionable business insights. Whether it’s developing real-time dashboards or predictive models, the focus is always on creating data-driven solutions that lead to measurable business value.

Hey!!

Looking for Data Engineering Service?

Revolutionize Your Data Infrastructure with Cutting-Edge Engineering Services from Aglowid IT Solutions!

Emerging Trends and the Future of Data Engineering

As data engineering evolves, staying ahead of emerging trends and interventions is crucial for maintaining a competitive edge. Here are some of the key developments shaping the future of data engineering:

AI-Driven Data Engineering

Artificial Intelligence (AI) is transforming data engineering by automating complex tasks and enhancing predictive analytics. AI algorithms can optimize data pipelines, identify anomalies, and provide actionable insights with greater accuracy and efficiency.

Real-Time Data Processing

The demand for real-time data processing is increasing as businesses require instant insights to make timely decisions. Technologies like Apache Kafka and stream processing frameworks are becoming essential for handling live data flows and providing real-time analytics.

Serverless Data Architecture

Serverless computing is gaining traction in data engineering due to its ability to simplify infrastructure management. Serverless data solutions, such as AWS Lambda and Google Cloud Functions, allow for automatic scaling and reduced operational overhead, enabling more agile data operations.

Data Privacy and Security Enhancements

With growing concerns over data privacy and security, new tools and regulations are emerging to protect sensitive information. Data engineering practices are increasingly incorporating advanced encryption methods, privacy-preserving techniques, and compliance with regulations like GDPR and CCPA.

Quantum Computing

Although still in its early stages, quantum computing promises to revolutionize data engineering by solving complex problems faster than traditional computers. As quantum technologies advance, they could significantly impact data processing capabilities and algorithms.

Edge Computing

Edge computing is emerging as a key trend for processing data closer to its source. As per Statista, the worldwide edge computing market is estimated to reach 350 billion US dollar mark by 2027. This approach reduces latency and bandwidth usage, making it ideal for IoT applications and real-time analytics in environments with limited connectivity. For a deeper dive into how edge computing compares with cloud computing and its impact on data engineering, check out this insightful blog on Edge Computing vs. Cloud Computing

These trends highlight the dynamic nature of data engineering and underscore the importance of staying updated with the latest innovations to leverage new opportunities and drive business success.

How Aglowid Can Help: Data Engineering Services & Engineers for Hire

We offer expert data engineering services and highly skilled data engineers for hire to help you build scalable data systems and pipelines for 2025. Whether you need full-scale solutions or additional engineering support, we provide flexible options tailored to your needs.

Avail Our Data Engineering Services to get:

- Data Pipeline Development: Build scalable, high-performance pipelines.

- Data Integration: Seamless data flow across systems.

- Real-Time Processing: Actionable insights with real-time data processing.

- Infrastructure Setup: Robust, scalable cloud architectures.

- Data Quality Assurance: Automated testing for data integrity.

Hire Data Engineers with expertise in:

- Data pipeline design and optimization

- Data modeling and architecture

- Cloud infrastructure and warehousing

- Machine learning pipelines

- Data security and governance

With Aglowid, you access top-tier talent to boost efficiency and reliability in your data projects.